Today, at the China Wuzhen Go Summit Artificial Intelligence Summit, AlphaGo's father, DeepMind founder Demis Hassabis and DeepMind chief scientist David Silver revealed important information about AlphaGo and what did AlphaGo mean? So that people can learn more about the secret behind AlphaGo.

What is AlphaGo?

AlphaGo is one of the first players to beat the Human Professional Goers and beat the Go World Championships. In March of 2016, AlphaGo went through five games in the world with more than 100 million viewers, culminating in a 4 to 1 total victory over Go World Shakespeare, an important milestone in the field of artificial intelligence. In the past, experts have predicted that artificial intelligence will take ten years to overcome the human professional players, after this game, AlphaGo by virtue of its "creative and witty" under the law, among the highest professional title - professional nine Ranks the history of the first to obtain this honor of non-human players.

How does AlphaGo train?

All along, Go is considered to be the most challenging item in traditional games for artificial intelligence. This is not just because Go contains a huge search space, but also because the evaluation of the location of the child is far more difficult than the simple heuristic algorithm.

In response to the great complexity of Go, AlphaGo uses a novel machine learning technique that combines the advantages of supervising learning and strengthening learning. Through training to form a policy network, the situation on the board as input information, and for all feasible sub-location to generate a probability distribution. Then, train a value network to predict the self-cue to predict the outcome of all available drop positions with a standard of -1 (absolute victory for the opponent) to 1 (AlphaGo's absolute victory). Both networks are very powerful themselves, and AlphaGo integrates these two networks into probability-based Monte Carlo Tree Search (MCTS), which realizes its real advantage. Finally, the new version of AlphaGo produces a large number of self-chess game, for the next generation version provides training data, the process of recycling.

How does Alphago decide to fall off?

After getting the chess game information, AlphaGo explores which location has a high potential value and a high probability based on a strategy network to determine the optimal placement. At the end of the assigned search time, the most frequently visited location in the simulation process will be the final choice for AlphaGo. After the early exploration and the process of the best Lazi constantly try to figure out, AlphaGo search algorithm will be able to add in its computing power to the human intuitive judgment.

History of AlphaGo

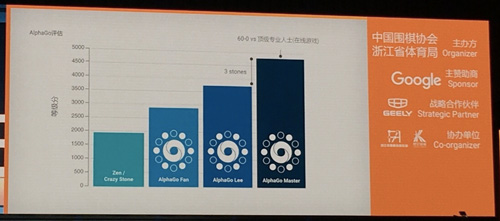

DeepMind divides AlphaGo roughly into several versions:The first generation, is defeated Fan Jia Alpha. And Zen / Crazy Stone before the Go software compared to the chess force to be higher than four children.The second generation is defeated by Li Shishi's AlphaGo Lee. Compared with the previous generation, chess strength higher than three children.The third generation, Ke Jie is now the opponent, but also the beginning of the 60-game winning streak: AlphaGo Master. Compared to defeated Li Shishi version, chess force again raised 3 son.

The new version of AlphaGo than the old version of AlphaGo to "strong three sons" to Kejie very surprised, Jiang Zhujiu and Rui Naiwei deliberately find Hassabis to confirm the "three sons" in the end what is the meaning of Hassabis made it clear that the first on the board Three sons. Rui Naiwei privately said he was willing to let three with AlphaGo a war. The following are the same as the "

AlphaGo Lee and AlphaGo Master are fundamentally different.

The new AlphaGo Master uses a single TPU operation with a stronger strategy / value network, with a one-tenth of the previous generation of AlphaGo Lee due to the application of more efficient algorithms. So a single TPU machine is sufficient to support.

The old version of AlphaGo Lee is calculated using 50 TPUs. Each search is followed by 50 steps and the calculation speed is 10,000 positions per second.

As a contrast, 20 years ago to beat Kasparov's IBM Deep Blue, you can search for 100 million locations. Silva says AlphaGo does not need to search for so many locations.

According to public information speculation, the AlphaGo2.0 technical principles and before have a huge difference:

1. To give up the supervision of learning, no longer use the 30 million Bureau of the game to train. This is AlphaGo's most dazzling algorithm, but also today the mainstream machine learning the inevitable core conditions: rely on high-quality data, in this particular problem so once again broke through.

2. Abandon the Monte Carlo tree search, no longer violent calculations. In theory, the algorithm is more stupid, the more the need for violence to do the calculation. The clever algorithm can greatly reduce the calculation of violence. From AlphaGo 2.0 "vest" Master's historical behavior, the game is very fast, about every 10 seconds to take a step, so the speed is likely to give up the calculation of violence.

3. greatly enhanced the role of enhanced learning, before the edge of the drum algorithm, the official to carry the main force. Think about how inspirational: two idiot machines, follow the rules of chess and winning rules, starting from the random start day and night, sum up experience, continuous criticism and self-criticism, one week after the final.

Under such an algorithm, AlphaGo 2.0 is very computationally expensive, and the current chess game is input to the neural network. The current flows and the output is the best game. I guess that under this algorithm, it is possible to rely solely on a GPU to work, and each move of chess consumes energy close to the human brain.

In other words, DeepMind's goal is to build generic artificial intelligence. The so-called general artificial intelligence, first AI have the ability to learn, followed by one by one, the implementation of a variety of different tasks. How to reach this goal? Hassabis says there are two tools: depth learning, reinforcement learning.

AlphaGo is the combination of deep learning and enhanced learning. AlphaGo is also a step towards DeepMind's goal toward generic artificial intelligence, although now it's more focused on Go.

Hassabis said it hoped that through AlphaGo research, let the machine get intuitive and creative.

The so-called intuition here is through the experience of direct access to the initial perception. Can not be expressed, through the behavior to confirm its existence and correct.

No comments:

Post a Comment